Digital Methods of Formative Assessment for Learning

How can technology help educators design formative assessments for their students? This was the question Professor Diana Laurillard addressed at the NUS Teaching Masterclass Workshop held on 14 September 2017. Together with Professor Laurillard, faculty members from across the University gathered to discuss the nature and forms of assessment, conventional versus digital methods of assessment, and how to provide feedback digitally.

To show why it matters to harness digital formative assessment for productive learning, Professor Laurillard invited participants to analyse the thinking and learning elicited by two digital methods designed to teach mathematical equations. In NumberBeads, the player combines sets of beads carrying different numbers to form required sums. In ChoiceBeads, the player answers pre-set multiple-choice questions (MCQs) by choosing among ready-made options. The ensuing discussion made it apparent that conceptual understanding of numbers was more likely to develop when learning was experiential, i.e., when students could investigate permutations and reach goals in their own way rather than being limited to ready-made options. However, participants also pointed out that developing such digital instruments, which were almost game-like, requires much heavier investment.

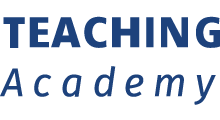

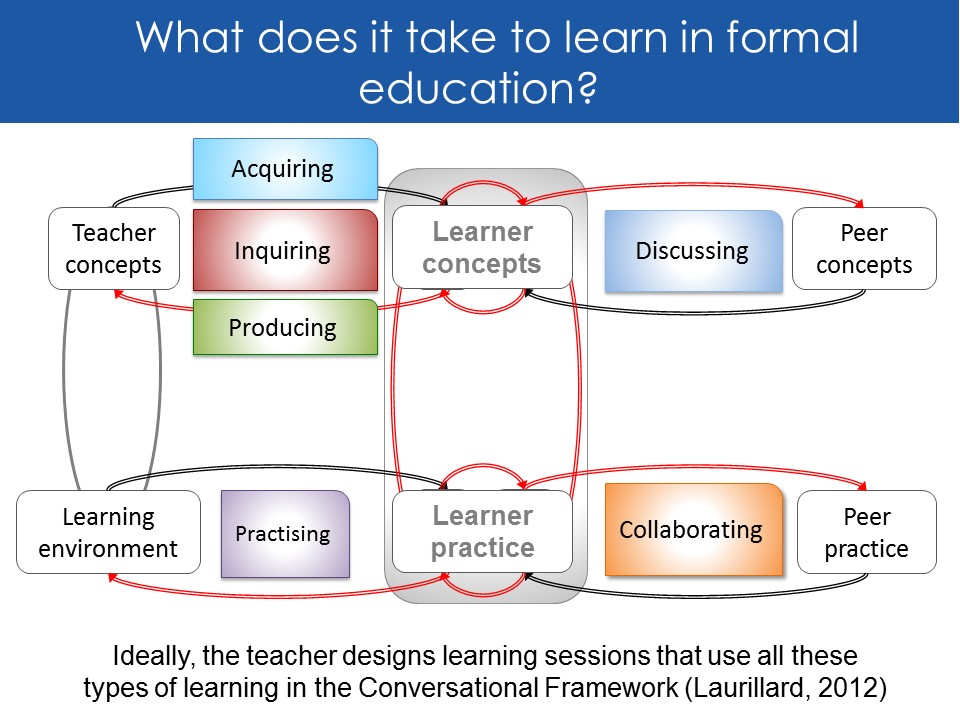

Positing that assessments determine what students have learnt by focusing on what they can produce, Professor Laurillard introduced the Conversational Framework (Laurillard 2012) as a way to understand both the different kinds of learning in formal education and the conventional products students can generate from each kind (see Figures 1 and 2). She then challenged participants to critically consider the digital equivalents of conventional assessment methods.

After discussion, participants pointed out that in some fields digital assessments and learning methods have become the norm, replacing traditional methods, often because they can give students more immediate feedback. For example, a Dentistry faculty member shared that students now practise their skills on digital simulations of teeth instead of plastic models, which gives them immediate feedback and lets them rewind and redo activities. Professor Laurillard added examples of students working on virtual welding models, and of Art History students tasked with reassembling a digital version of a Picasso collage to gain first-hand understanding of Cubist theory. In all these cases, students could test and apply theories hands-on in a safe environment, clarifying their understanding of concepts as they worked through interactive tasks. Such methods therefore make fuller use of what digital technology makes possible.

Professor Laurillard then presented the following possible digital assessment methods for faculty to consider designing:

- Concealed Multiple Choice Question (CMCQ): Automated tests such as multiple-choice questions, with their limited set of preconfigured answers, offer little personalised feedback. To address this, Professor Laurillard introduced the CMCQ approach, in which students first type in their own response to the question. They could then be asked to select, from other students’ answers, the one closest in meaning to their own. This would let students be active constructors of meaning rather than passive selectors from a limited set of answers. Although working examples of CMCQ were currently difficult to find, the approach had the potential to prompt more proactive student reflection.

- Vicarious Master Classes: For practical activities that are difficult to computerise, a small group of students (reasonably representative of the wider student population) could be recorded giving their responses and receiving individualised feedback. Once these recordings are posted online, large numbers of students could watch them, evaluate the responses for themselves and learn vicariously from the feedback given.

- Digitally-assisted Peer Review: To help students think through and refine their work, they could be asked to read and critique other students’ answers after submitting their first drafts. After ‘grading’ these answers (which could be a range of good and poor drafts chosen by the tutor), students could review and revise their own drafts before submitting them to tutors for final evaluation. At the end of the exercise, the tutor could summarise the strengths and weaknesses illustrated by the best and worst answers. By giving and receiving feedback at the same time, students actively extended and deepened their understanding of difficult concepts. A faculty member from Chemistry also shared that peer review improved students’ appreciation of both their own and others’ competencies. On a larger scale, asking students to rank the best and worst answers could allow a much larger group of students to go through the same evaluation and self-assessment processes.

After participants held group discussions on digital ways to elicit more productive student thinking through required formative assessments, the session concluded with a reminder: although physical presence remained the irreplaceable ‘magic ingredient’ of the learning process, digital approaches still had much potential to supplement traditional ones. They were also an essential means for those who could not make their presence felt or their voices heard in person.