Assistive Communication Tools for the Sensory Impaired

Collaborators

This ongoing project is a collaboration with SGEnable and Tote Board.

Overview

Tactile communication tools for visually impaired, hearing-impaired and deafblind individuals, built on the technique of sensory substitution.

Key Problem(s)

Individuals with sensory impairments face many daily challenges that can prevent them from accessing information and from maintaining a healthy level of independence, social interaction and well-being. For some, the barrier is limited access to caregivers or the high cost of assistive technology; for others, the assistive technology they need simply doesn't exist. For deafblind individuals, the absence of both visual and auditory communication channels can prevent meaningful interaction with the people and world around them, leaving many to struggle with mental health and social difficulties.

Objective

The objective of this project is to investigate how effectively tactile stimuli can serve as a form of feedback that helps sensory-impaired individuals communicate and access information.

For persons with disabilities, the project aims to:

- Improve psychological and emotional well-being

- Support greater independence and functional skills in areas such as communication, personal navigation and situational awareness

- Provide access to information that supports informed decision-making and planning

- Create greater opportunities to participate in social, family, economic and community life

For caregivers, the project aims to:

- Increase their confidence in their ability to provide competent care and support to persons with disabilities

Solution

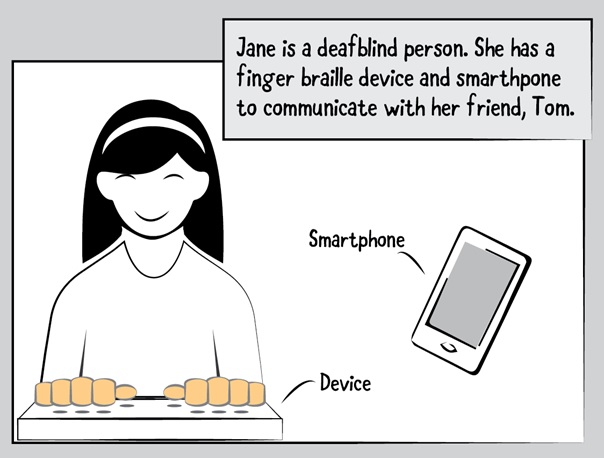

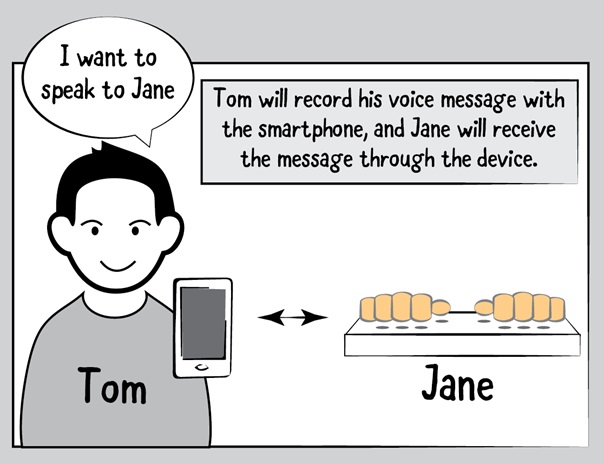

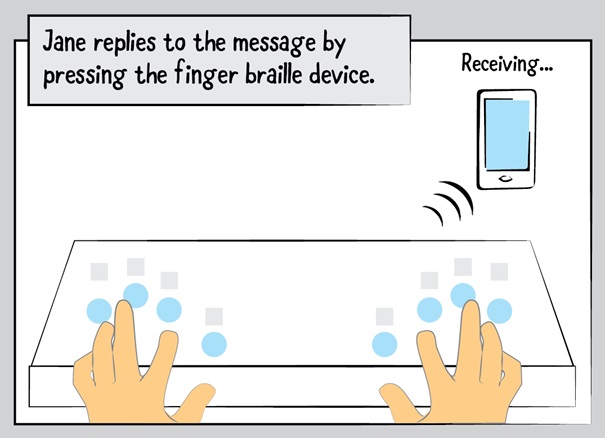

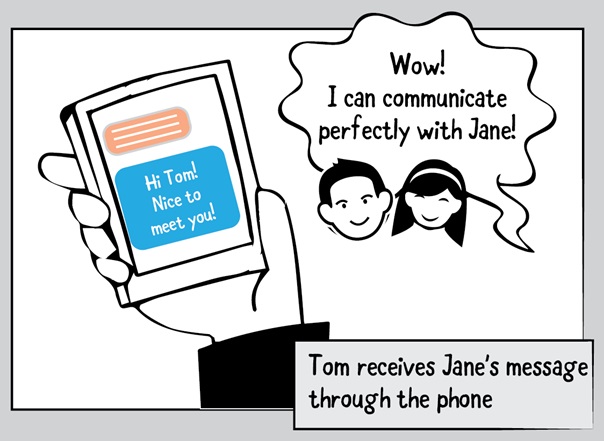

The solution is a novel technology platform that uses haptic stimuli to support people with visual and auditory impairments. The platform has two key components:

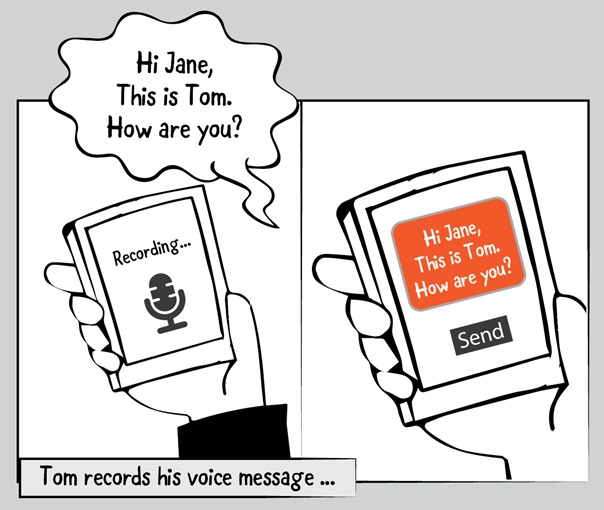

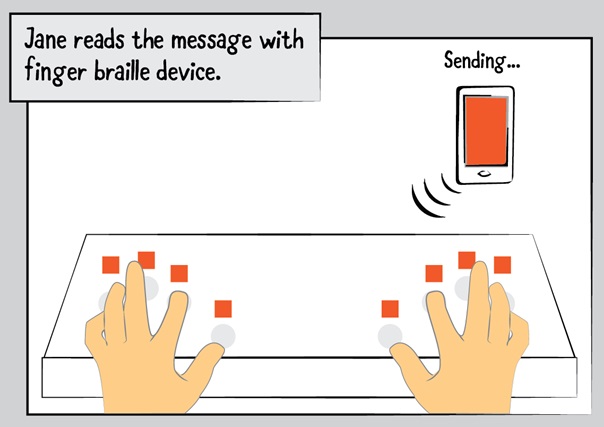

- A computer-mediated device that extends the Finger Braille technique

- A partnering mobile software application
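The page does not describe how the device encodes characters. As a minimal sketch, assuming uncontracted English braille (letters only) and the commonly described Finger Braille layout, in which the receiver's left index, middle and ring fingers carry dots 1 to 3 and the right-hand fingers carry dots 4 to 6, text could be turned into finger taps as below. Finger Braille is traditionally used with Japanese braille, so the English alphabet here is itself an assumption, and the names `LETTER_DOTS`, `DOT_TO_FINGER` and `text_to_finger_braille` are hypothetical.

```python
# Hypothetical illustration only; not the project's device firmware.
# Assumes uncontracted (Grade 1) English braille letters and the conventional
# Finger Braille layout (left hand = dots 1-3, right hand = dots 4-6).

# Dots of the first ten letters (a-j) of the braille alphabet.
FIRST_DECADE = {
    "a": {1}, "b": {1, 2}, "c": {1, 4}, "d": {1, 4, 5}, "e": {1, 5},
    "f": {1, 2, 4}, "g": {1, 2, 4, 5}, "h": {1, 2, 5}, "i": {2, 4}, "j": {2, 4, 5},
}

# Assumed mapping from braille dot number to the finger that is tapped.
DOT_TO_FINGER = {
    1: "left index", 2: "left middle", 3: "left ring",
    4: "right index", 5: "right middle", 6: "right ring",
}


def build_letter_dots() -> dict[str, frozenset[int]]:
    """Build the a-z dot table from the regular structure of the braille alphabet."""
    table = {ch: frozenset(dots) for ch, dots in FIRST_DECADE.items()}
    # k-t repeat a-j with dot 3 added.
    for base, letter in zip("abcdefghij", "klmnopqrst"):
        table[letter] = table[base] | {3}
    # u, v, x, y, z repeat a-e with dots 3 and 6 added; w is an exception.
    for base, letter in zip("abcde", "uvxyz"):
        table[letter] = table[base] | {3, 6}
    table["w"] = frozenset({2, 4, 5, 6})
    return table


LETTER_DOTS = build_letter_dots()


def text_to_finger_braille(text: str) -> list[tuple[str, ...]]:
    """Convert text to finger taps, one tuple of fingers per character.

    A space becomes an empty tuple (no fingers tapped). Characters outside a-z
    raise KeyError, since digits and punctuation need extra indicator cells.
    """
    taps = []
    for ch in text.lower():
        if ch == " ":
            taps.append(())
        else:
            taps.append(tuple(DOT_TO_FINGER[d] for d in sorted(LETTER_DOTS[ch])))
    return taps


print(text_to_finger_braille("hi"))
```

Each tuple lists the fingers to tap simultaneously for one character; a real device would also need timing between taps, which this sketch leaves out.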

Working alongside the device, the mobile application handles data and converts it between sensory modalities, including image-to-speech (auditory), image-to-braille (tactile), image-to-text (visual), speech-to-braille (tactile), speech-to-text (visual), braille-to-speech (auditory) and braille-to-text (visual). By combining these two components, the platform gives users an interface that supports effective communication and improves accessibility to other forms of information interaction.
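The conversion pipeline itself is not specified here. As a minimal sketch of only the text and braille legs, assuming uncontracted English braille letters and Unicode braille patterns (the U+2800 block) as the in-app braille representation, text-to-braille and braille-to-text could look like this. Speech or image inputs would first need a speech-recognition or OCR step to produce text, and braille-to-speech would pass the decoded text to a text-to-speech engine; those steps are out of scope. The names `text_to_braille` and `braille_to_text` are hypothetical.

```python
# Hypothetical sketch of the text <-> braille conversions only.
# Assumes uncontracted (Grade 1) English braille letters and Unicode braille cells.

# Dot numbers for each letter a-z.
LETTER_DOTS = {
    "a": "1", "b": "12", "c": "14", "d": "145", "e": "15",
    "f": "124", "g": "1245", "h": "125", "i": "24", "j": "245",
    "k": "13", "l": "123", "m": "134", "n": "1345", "o": "135",
    "p": "1234", "q": "12345", "r": "1235", "s": "234", "t": "2345",
    "u": "136", "v": "1236", "w": "2456", "x": "1346", "y": "13456", "z": "1356",
}


def cell(dots: str) -> str:
    """Return the Unicode braille pattern for a dot string such as '125'."""
    # In the U+2800 block, dot n sets bit n - 1 of the offset from U+2800.
    return chr(0x2800 + sum(1 << (int(d) - 1) for d in dots))


TEXT_TO_BRAILLE = {ch: cell(dots) for ch, dots in LETTER_DOTS.items()}
TEXT_TO_BRAILLE[" "] = cell("")  # the blank cell U+2800 stands for a space
BRAILLE_TO_TEXT = {b: ch for ch, b in TEXT_TO_BRAILLE.items()}


def text_to_braille(text: str) -> str:
    """Text to braille, e.g. the final step of a speech-to-braille pipeline."""
    return "".join(TEXT_TO_BRAILLE[ch] for ch in text.lower())


def braille_to_text(braille: str) -> str:
    """Braille to text, which could then be displayed or sent to text-to-speech."""
    return "".join(BRAILLE_TO_TEXT[b] for b in braille)


print(text_to_braille("hi"))  # prints ⠓⠊
print(braille_to_text(text_to_braille("hello world")))  # prints hello world
```

Encoding each cell as a Unicode braille pattern keeps the dot information in an ordinary string, so the same data could drive a tactile output or be shown on screen. Characters outside a-z and space raise `KeyError` in this sketch.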

Publications and Press

Publications:


Project Contact

Yen Ching-Chiuan

didyc at nus.edu.sg

Team Members

Yen Ching-Chiuan

Felix Austin Lee

Pravar Jain

David Tolley

Tan Jun Yuan

Shienny Karwita

Barry Chew

Ankit Bansal